Building AI on Polkadot: Why centralized compute is the wrong foundation

Build AI on Polkadot with verifiable data, cryptographic privacy, and native interoperability. 90% cost savings, no vendor lock-in, production-ready.

By Sepi Di•October 14, 2025

Your AWS bill isn't just expensive. It's an existential threat wrapped in convenience.

If you're building AI in 2025, you've probably hit the ceiling: training costs that balloon with every parameter, data silos that cripple model quality, privacy constraints that turn compliance into a nightmare. And then there's that nagging suspicion that one ToS change or geopolitical shift could yank away your entire stack.

Big cloud providers solved distribution, sure. But they created new problems that blockchain was actually designed to fix.

Polkadot offers something different: infrastructure where compute is decentralized, data is verifiable, privacy is cryptographic, and you're not building on someone else's permission. This isn't theoretical. Projects are shipping production AI on Polkadot right now, using technical primitives like TEEs, ZK proofs, XCM, and elastic scaling to solve problems centralized platforms can't touch.

Here's what that actually looks like.

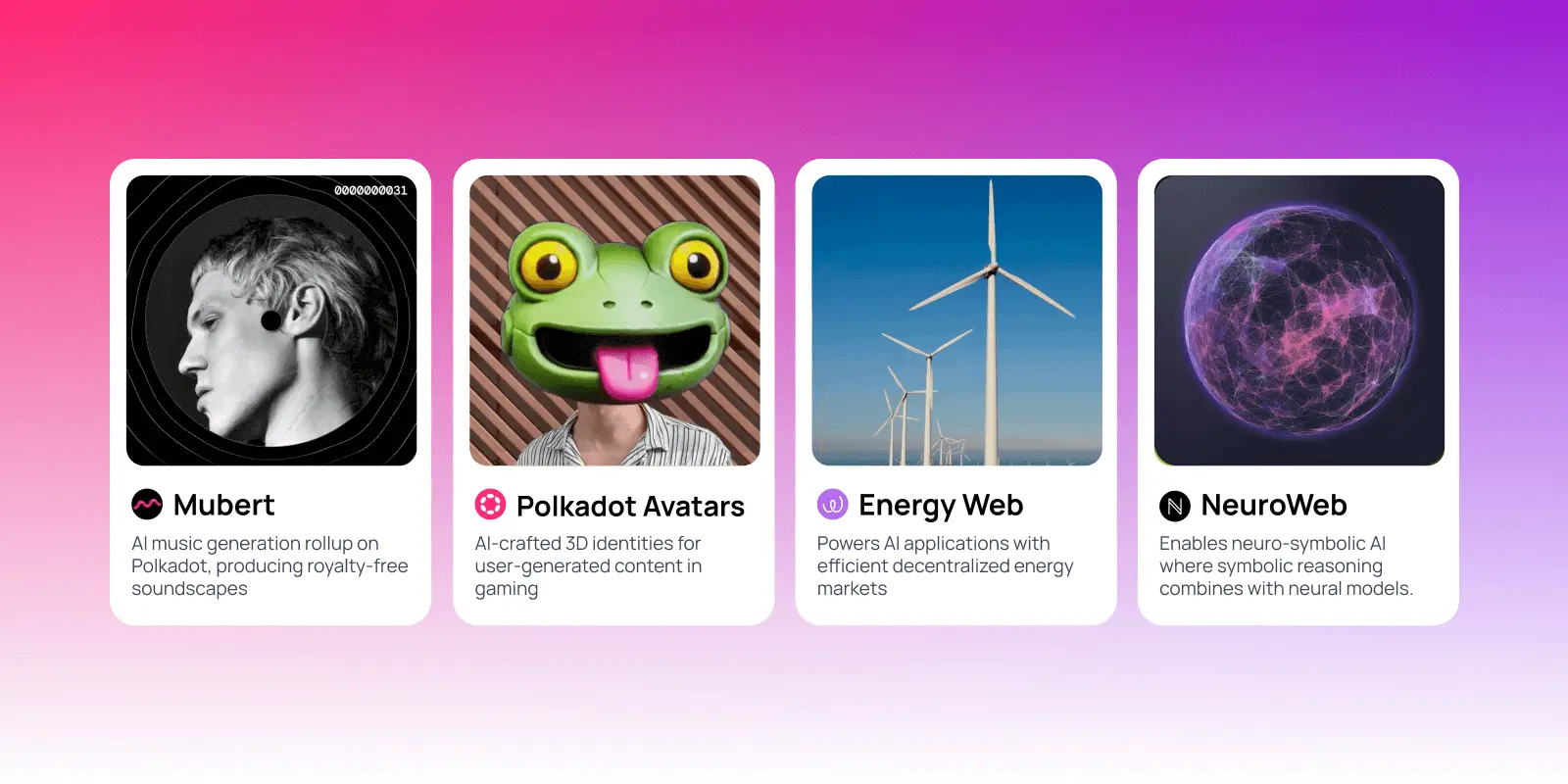

Real-world projects shipping today

Real projects, real results. Before diving into the technical architecture, here's what's already shipping:

Mubert: AI music generation rollup on Polkadot, producing infinite royalty-free soundscapes. It uses a Decentralized Knowledge Graph (DKG) for intellectual property verification, ensuring generated music doesn't inadvertently copy copyrighted material. The rollup handles compute-intensive generation while anchoring provenance onchain.

Polkadot Avatars: AI-crafted 3D identities for user-generated content in gaming. Leverages elastic scaling for rendering spikes when new avatar drops occur, with identity anchored via PoH to prevent bot farms.

Energy Web: Powers AI applications with efficient decentralized energy markets. AI agents optimize energy distribution based on real-time demand, with settlements handled via XCM across energy-producing parachains.

OriginTrail/NeuroWeb: Beyond data verification, enables neuro-symbolic AI where symbolic reasoning (knowledge graphs) combines with neural models. Demonstrated at MIT's DecAI Summit, topping decentralized AI projects for deepfake detection via immutable content fingerprints.

These aren't proofs-of-concept. They're production systems handling real workloads. Here's how the infrastructure makes this possible.

Decentralized compute: 90% cost reduction without centralized control

Training large language models requires massive parallel processing, traditionally gated by whoever controls the data centers. Polkadot’s parallel architecture distributes compute workloads across global hardware without sacrificing security or performance.

Acurast crowdsources compute from 135,000+ smartphones worldwide, using ARM TrustZone TEEs for confidential execution. The Polkadot SDK integration lets you shard AI tasks, distribute inference across edge devices for IoT applications, or batch-process training runs. The protocol ensures tamper-proof results with onchain anchoring and has processed 435 million+ transactions for AI workloads.
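To make the shard-and-anchor idea concrete, here is a minimal sketch, assuming a toy workload. It splits a batch into shards, runs a stand-in computation on each, and folds the per-shard result hashes into one digest that could be anchored onchain. Every name in it (`shard`, `run_shard`, `result_hash`, `anchor_digest`) is hypothetical; this is not Acurast's or the Polkadot SDK's API.

```python
import hashlib
import json


def shard(items, num_shards):
    """Split items into num_shards roughly equal, order-preserving shards."""
    size, extra = divmod(len(items), num_shards)
    shards, start = [], 0
    for i in range(num_shards):
        end = start + size + (1 if i < extra else 0)
        shards.append(items[start:end])
        start = end
    return shards


def run_shard(inputs):
    """Stand-in for the work one device would do (here: square each number)."""
    return [x * x for x in inputs]


def result_hash(shard_index, outputs):
    """Hash one shard's outputs together with its index."""
    payload = json.dumps({"shard": shard_index, "outputs": outputs}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def anchor_digest(hashes):
    """Fold the per-shard hashes into one digest (the value one might anchor)."""
    return hashlib.sha256("".join(hashes).encode()).hexdigest()


batch = list(range(10))
shards = shard(batch, 3)
results = [run_shard(s) for s in shards]
hashes = [result_hash(i, r) for i, r in enumerate(results)]
digest = anchor_digest(hashes)
print(digest)
```

Anyone holding the shard outputs can recompute the digest and compare it with the anchored value; changing a single output changes the digest.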

The math? Up to 90% cheaper than centralized clouds. Scalability comes from Polkadot's Agile Coretime for bursty demands like sudden training spikes or viral inference loads.

The developer workflow: Start with Polkadot SDK to create custom rollups for AI-specific logic. Tools like PDP (Polkadot Deployment Portal) offer no-code deployment, handling data availability, RPCs, and monitoring in one click. You focus on model architecture while Polkadot handles infrastructure resilience.

Verifiable data: fixing the garbage-in, garbage-out problem

AI models are only as trustworthy as their training data. Centralized sources breed bias, manipulation, and unverifiable provenance, all of which are catastrophic for applications requiring accountability.

OriginTrail's Decentralized Knowledge Graph (DKG) on NeuroWeb organizes data into cryptographically hashed graphs anchored onchain. Each data point includes fingerprints proving origin, timestamp, and transformation history. When your model makes a claim, you can trace it back to the source with mathematical certainty. Crucial for regulated industries.
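A rough sketch of what "fingerprints proving origin, timestamp, and transformation history" can mean in practice: each provenance step below commits to the hash of the previous step, so editing any earlier step breaks verification. The record fields and function names are assumptions made for illustration, not the DKG's actual data model or API.

```python
import hashlib
import json


def fingerprint(record):
    """SHA-256 over a canonical JSON encoding of the record."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()


def add_step(history, origin, timestamp, transformation, content):
    """Append a provenance step that commits to the previous step's fingerprint."""
    prev = history[-1]["fingerprint"] if history else None
    body = {
        "origin": origin,
        "timestamp": timestamp,
        "transformation": transformation,
        "content_hash": hashlib.sha256(content.encode()).hexdigest(),
        "prev": prev,
    }
    history.append({**body, "fingerprint": fingerprint(body)})
    return history


def verify(history):
    """Recompute every fingerprint and check each link to its predecessor."""
    prev = None
    for step in history:
        body = {k: v for k, v in step.items() if k != "fingerprint"}
        if step["prev"] != prev or fingerprint(body) != step["fingerprint"]:
            return False
        prev = step["fingerprint"]
    return True


history = []
add_step(history, "sensor-a", "2025-10-14T00:00:00Z", "ingest", "raw reading 21.5")
add_step(history, "pipeline", "2025-10-14T00:05:00Z", "normalize", "reading 0.215")
print(verify(history))
```

In a real deployment only the latest fingerprint would need to live onchain; the full history can stay offchain and still be checked against it.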

NeuroWeb has seen explosive growth: 500 million+ knowledge assets with 3.7 million TRAC recently teleported for AI training verification.

This architecture combats the "Dead Internet Theory," distinguishing human content from AI slop. Projects like Umanitek use blockchain fingerprints for supply chain verification and deepfake detection with law enforcement.

For AI developers: outputs that are provably sourced, not just accurate. That’s a requirement for enterprise adoption that centralized platforms can't guarantee.

AI swarms next: These verifiable datasets provide the coordination layer for autonomous agents that share knowledge, validate outputs, and collaborate across domains while remaining transparent and auditable.

Privacy and security: ZK Proofs and TEEs for compliant AI

Handling personal data in AI requires ironclad privacy to comply with GDPR, HIPAA, and emerging AI regulations while building user trust.

Manta Network leverages zk-SNARKs (zero-knowledge Succinct Non-interactive Arguments of Knowledge) for onchain privacy. Use Solidity or Ink! contracts to prove computations, like model inference results, without revealing inputs. Verification completes in approximately 1 millisecond, fast enough for real-time applications.

Paired with Polkadot's shared security from 700+ validators and Nakamoto coefficient of ~185, you get robust protection that outperforms most L1s. The privacy isn't bolt-on; it's architectural.

Proof of Personhood (PoP) via KILT Protocol establishes sybil-resistant digital identities on Polkadot using Decentralized Identifiers (DIDs) and verifiable credentials. It provides a privacy-preserving way to prove human uniqueness without exposing personal data, enabling fair airdrops, governance participation, AI training data contributions, and other applications requiring human verification.
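One common way to get "one human, one claim" without exposing who the human is relies on a nullifier: a tag derived from a private secret and the application context. The sketch below shows only that bookkeeping. It is an illustrative assumption, not KILT's protocol, and it leaves out the part a real system needs most, namely a credential or zero-knowledge proof that the tag was derived from a verified person. `nullifier` and `Airdrop` are hypothetical names.

```python
import hashlib


def nullifier(person_secret, context):
    """Tag that is stable per person and context, and differs across contexts."""
    return hashlib.sha256(person_secret + b"|" + context.encode()).hexdigest()


class Airdrop:
    """Accepts each nullifier at most once (hypothetical application)."""

    def __init__(self, context):
        self.context = context
        self.seen = set()

    def claim(self, tag):
        """Return True for a first claim, False for a repeat of the same tag."""
        if tag in self.seen:
            return False
        self.seen.add(tag)
        return True


drop = Airdrop("airdrop-2025")
tag = nullifier(b"alice-secret", drop.context)
print(drop.claim(tag))  # first claim is accepted
print(drop.claim(tag))  # repeat claim is rejected
```

Because the context is part of the hash, the same person produces unrelated tags for an airdrop and for a governance vote, which limits cross-application tracking.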

Extensions to PoP are rolling out to expand verification methods. Proof of Ink (PoI) and Proof of Video Interaction (PoVI), both launching in the coming months, add gamified and flexible identity verification options. PoI anchors one-time tattoo scans as onchain credentials, while PoVI uses AI/ML to validate short video interactions, outputting zero-knowledge proofs for privacy-preserving onchain anchoring.

For developers, these primitives enable building AI systems that prove human involvement without surveillance—critical for combating deepfakes and bot pollution in training datasets.

Interoperability: AI agents that roam free

AI agents need seamless data and asset flows across ecosystems. Polkadot's XCM v5 enables this without risky bridges or wrapped tokens: it is native cross-chain messaging secured by the relay chain (aka Polkadot Chain).

Build agents in Ink! (WASM smart contracts) that invoke cross-chain calls. Example: an AI trading bot on Hydration ($400 million+ TVL) pulls price feeds from Kusama, executes across multiple DEXs, and settles via async backing with sub-second finality.

Integrations like Peaq with Fetch.ai bring autonomous agents to Polkadot's DePIN ecosystem, automating tasks like optimizing robot fleets or coordinating sensor networks. Use Agile Coretime for on-demand scaling. Rent blockspace during high-activity periods, release during idle times. Pay only for what you use.
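The rent-when-busy, release-when-idle pattern can be sketched as a simple scaling policy. The load figures, the per-core throughput, and the core limits below are made-up illustration values, and `cores_needed` and `plan` are hypothetical helpers; actual coretime purchases go through Polkadot's coretime mechanisms, which this sketch does not model.

```python
import math


def cores_needed(pending_tx, tx_per_core, min_cores=1, max_cores=8):
    """Cores to hold for the next period, clamped to [min_cores, max_cores]."""
    wanted = math.ceil(pending_tx / tx_per_core) if pending_tx > 0 else 0
    return max(min_cores, min(max_cores, wanted))


def plan(load_per_period, tx_per_core):
    """Return (cores, change) per period; change > 0 rents, change < 0 releases."""
    schedule, current = [], 0
    for pending in load_per_period:
        target = cores_needed(pending, tx_per_core)
        schedule.append((target, target - current))
        current = target
    return schedule


load = [200, 900, 4500, 12000, 300]  # hypothetical pending transactions per period
schedule = plan(load, tx_per_core=1000)
for period, (cores, change) in enumerate(schedule):
    print(period, cores, change)
```

With this hypothetical load the policy holds 16 core-periods in total, compared with 40 if the peak of 8 cores were reserved for all five periods.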

The technical advantage: XCM supports memory-optimized messages, ideal for agent coordination. Serialize agent states as protocol buffers, transmit via parachain slots, and maintain state consistency across chains without custom bridge infrastructure.
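As a sketch of the serialize, transmit, and stay-consistent loop, the example below encodes an agent state deterministically, ships it with a digest, and has the receiver accept only an intact update that is exactly one step ahead of what it holds. To stay within the standard library it uses canonical JSON as a stand-in for protocol buffers, and it does not construct real XCM messages. The `AgentState` fields and helper names are assumptions.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class AgentState:
    agent_id: str
    sequence: int  # increases by one with every state update
    balance: int   # integer units, avoiding float rounding across chains
    task: str


def encode(state):
    """Deterministic byte encoding: sorted keys, no extra whitespace."""
    return json.dumps(asdict(state), sort_keys=True, separators=(",", ":")).encode()


def decode(payload):
    """Rebuild an AgentState from its encoded bytes."""
    return AgentState(**json.loads(payload))


def digest(state):
    """SHA-256 of the canonical encoding, sent alongside the payload."""
    return hashlib.sha256(encode(state)).hexdigest()


def accept(local, payload, claimed_digest):
    """Receiver side: keep the local state unless the update is intact and next in order."""
    incoming = decode(payload)
    if digest(incoming) != claimed_digest:
        return local  # corrupted or tampered payload
    if incoming.agent_id != local.agent_id or incoming.sequence != local.sequence + 1:
        return local  # stale, replayed, or out-of-order update
    return incoming


s0 = AgentState("agent-1", 0, 100, "idle")
s1 = AgentState("agent-1", 1, 90, "rebalance")
print(accept(s0, encode(s1), digest(s1)) == s1)
```

The sequence check is what keeps two chains from drifting apart: a replayed or reordered message leaves the receiver's state unchanged instead of silently overwriting it.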

This enables DeFAI (Decentralized Finance + AI), where agents handle yield optimization, risk assessment, or portfolio rebalancing autonomously across multiple chains—complexity that would require weeks of bridge security audits on other platforms, handled natively on Polkadot.

Scalability with JAM and Polkadot 2.0: vertical growth without compromise

Polkadot 2.0 went live in August 2025, pushing throughput to 623,000+ transactions per second via async backing and elastic scaling. This isn't theoretical; it's production capacity handling real workloads today.

JAM (Join-Accumulate Machine), rolling out soon, separates execution from consensus for true vertical scalability. AI services run as "core-time" rentals supporting millions of TPS with exabyte-level data availability. Perfect for data-heavy AI applications without the decentralization trade-offs that plague other high-throughput chains.

For developers, this means:

- Bursty workload handling: Training runs that spike compute needs scale automatically

- Real-time inference: Sub-second finality keeps user experiences smooth

- Massive datasets: Exabyte DA enables training on web-scale corpora without centralized storage

The architecture uses erasure coding and random sampling for data availability proofs, ensuring verifiability without requiring every node to store everything—critical for AI applications generating terabytes daily.
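Both ideas can be shown in miniature. The sketch below uses single-chunk XOR parity as the simplest possible erasure code (production systems typically use far stronger codes such as Reed-Solomon), and it computes the chance that random sampling catches withheld data, assuming independent uniform samples. Function names are illustrative, not taken from any Polkadot library.

```python
from functools import reduce


def xor_bytes(a, b):
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(chunks):
    """Append one parity chunk: the XOR of all equal-length data chunks."""
    return chunks + [reduce(xor_bytes, chunks)]


def recover(coded):
    """Rebuild the single missing chunk (marked None) by XOR-ing the rest."""
    missing = [i for i, c in enumerate(coded) if c is None]
    if len(missing) != 1:
        raise ValueError("XOR parity recovers exactly one missing chunk")
    present = [c for c in coded if c is not None]
    restored = list(coded)
    restored[missing[0]] = reduce(xor_bytes, present)
    return restored


def detection_probability(withheld_fraction, samples):
    """Chance that at least one of `samples` random samples hits a withheld chunk."""
    return 1 - (1 - withheld_fraction) ** samples


coded = encode([b"abcd", b"efgh", b"ijkl"])
damaged = [coded[0], None, coded[2], coded[3]]
print(recover(damaged) == coded)
print(detection_probability(0.5, 10))
```

If half the chunks are withheld, ten samples already detect the problem about 99.9% of the time, which is why light sampling can replace full storage on every node.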

Governance and funding: community-driven AI development

OpenGov's Treasury funds AI initiatives directly, no VC gatekeepers, no equity dilution. Recent examples:

- $25,000 for Project DAVOS: AI delegate matcher improving governance participation

- Clarys.ai: Governance tools using natural language processing

- CyberGov: AI agents (GPT-4, Gemini, Grok) reviewing proposals, achieving 2/3 consensus in minutes, the first AI onchain votes in blockchain history
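The 2/3 consensus rule mentioned for CyberGov can be sketched as a supermajority check over agent votes. Whether CyberGov counts "at least 2/3" or "more than 2/3", and how it treats abstentions, is not stated here, so the threshold semantics and the agent labels below are assumptions.

```python
from collections import Counter
from fractions import Fraction


def supermajority(votes, threshold=Fraction(2, 3)):
    """Return the option backed by at least `threshold` of the votes, else None."""
    if not votes:
        return None
    option, count = Counter(votes.values()).most_common(1)[0]
    return option if Fraction(count, len(votes)) >= threshold else None


# Hypothetical agent labels and votes.
votes = {"agent_a": "aye", "agent_b": "aye", "agent_c": "nay"}
print(supermajority(votes))
```

Using `Fraction` keeps the comparison exact, so a 2-of-3 vote meets a 2/3 threshold without floating-point edge cases.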

The dual-track system (Root for major changes, Whitelisted for fast reviews) lets developers pitch AI projects directly to 1.49 million+ active participants. Proposals get real community scrutiny, and successful projects receive ongoing funding as they hit milestones.

This creates sustainable development paths: build, demonstrate traction, secure additional funding, scale, all without sacrificing ownership or control.

Getting started: the developer stack

Polkadot SDK: Modular framework for building custom chains. Supports EVM/Solidity for Ethereum developers, WASM/Ink! for performance-critical applications, and no-code deployment via PDP for rapid prototyping.

Testnets: Paseo network for testing XCM interactions and coretime rentals before mainnet deployment. Polkadot’s sister network, Kusama, can be used for battle-testing under real economic conditions.

Grants: Developers can submit funding proposals through Polkadot OpenGov. Several AI-focused projects have already secured Treasury support, with allocations ranging from smaller prototype funding to larger production-scale deployments.

Integration libraries: Rust crates for Substrate development, JavaScript SDKs for frontend integration, and comprehensive documentation at docs.polkadot.com covering everything from basic chain setup to advanced XCM routing.

The bottom line

Polkadot isn't perfect. Adoption lags hype-driven chains, and the learning curve for Substrate development is steeper than forking an EVM template. The ecosystem is smaller than Ethereum's, meaning fewer off-the-shelf integrations and more custom tooling.

But for AI developers serious about decentralization and scalability, where verifiability matters, privacy is non-negotiable, and vendor lock-in is unacceptable? Polkadot's technical foundation makes rational sense. The focus on shared security eliminates bootstrapping costs. Native interoperability removes bridge risks. Elastic scaling handles real-world workload variance.

Start small: Deploy a prototype rollup handling inference for a specific model. Test privacy primitives with a federated learning experiment. Query DKG for verifiable training data. The ecosystem's maturity in 2025 positions it well for AI's next wave because the infrastructure actually works.

The question isn't whether centralized clouds can scale AI. They obviously can. The question is whether you want to build the future of intelligence on infrastructure you don't control, with data you can't verify, under terms that change arbitrarily.

Polkadot offers an alternative. It's harder initially. It's more technically demanding. But it's infrastructure designed for systems that need to outlast their creators. Which is exactly what transformative AI requires.

The tools are production-ready. The community is funding builders. The real question is whether you’re ready to build AI that’s truly decentralized. At Magenta Labs, we’re here to support you on that journey.

About the author

Sepi Di is the Growth Lead at Magenta Labs with 7+ years in product and marketing, applying that background to drive growth in Web3. A long-time degen, Sepi is passionate about scaling communities and empowering builders to create lasting impact.